Before I joined the tech industry, the terms Docker, containers, and Kubernetes often popped up in online tech discussions. They seemed important, yet the concepts remained vague to me. If you’re in a similar position, curious about what these terms mean and why they are so prevalent, this guide is for you. We’ll explore containers and Docker from the ground up, starting with the fundamental question: what is a Docker container?

Understanding Docker Containers

What Exactly is a Container?

At its core, a container is an isolated environment where applications and their dependencies can run. Think of it as a lightweight, portable, and executable package that includes everything an application needs to run – code, runtime, system tools, libraries, and settings. This isolated environment is often referred to as a “sandbox.”

This isolation is key to understanding what a Docker container truly is. It ensures that an application running in a container is separated from the underlying host system and other containers. This separation brings several crucial advantages.

Why are Docker Containers Transformative?

Docker containers have revolutionized software development and deployment due to their numerous benefits. Let’s explore some of the most significant advantages:

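Portability: Imagine shipping a physical container. It doesn’t matter what kind of truck or ship carries it; the contents remain secure and intact. Docker containers offer the same portability for applications. Because a container encapsulates all its dependencies, it is largely independent of the underlying infrastructure. This means you can run a Docker container on virtually any system that runs Docker – your laptop, a server in your data center, or a cloud environment – without worrying about compatibility issues. There are two caveats: containers share the host’s kernel, so Linux containers need a Linux kernel (Docker Desktop supplies one through a lightweight VM on macOS and Windows), and images are built for a specific CPU architecture, such as amd64 or arm64. Within those limits, this “write once, run anywhere” capability is a game-changer for developers.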

Consistency: Building upon portability, consistency ensures that your application behaves the same regardless of where it’s deployed. The isolated environment provided by Docker gives the application the same runtime environment (the same libraries, runtime versions, and system tools) across development, testing, and production stages. This largely eliminates the frustrating “it works on my machine” problem. If your application runs correctly in a Docker container locally, you can be confident that the same image will behave the same way when deployed to a cloud server, provided its configuration and external services match.

Speed of Deployment: The portability and consistency of Docker containers drastically accelerate deployment times. Since containers are self-contained and environment-agnostic, you bypass the lengthy process of configuring specific environments for each deployment. If an application is containerized, deploying it becomes as simple as running the container on the target system. This speed is crucial in fast-paced development cycles and for quickly scaling applications to meet user demand.

Docker Containers vs. Virtual Machines: Key Differences

Containers are often compared to virtual machines (VMs), as both technologies provide isolated environments. However, they achieve isolation through fundamentally different approaches, leading to significant differences in performance and efficiency. Understanding these differences is crucial to grasping what a Docker container is and what makes it distinctive.

Virtual Machines: Hardware Virtualization Explained

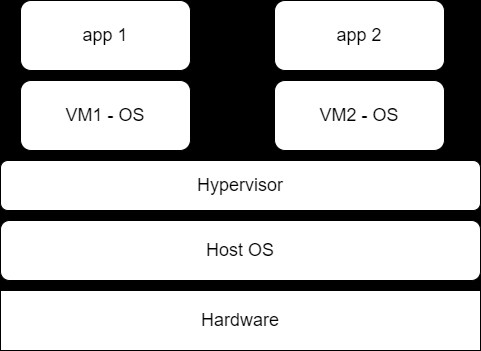

A virtual machine emulates an entire computer system. Each VM functions as a complete, independent machine within a host machine. This is achieved by virtualizing the host machine’s hardware – CPU, memory, storage, etc. A software layer called a hypervisor sits between the VMs and the host hardware, managing and allocating virtualized resources to each VM.

As depicted in the previous diagram, the hypervisor is the central component in VM architecture. It handles the complex task of abstracting the physical hardware and presenting it as virtual hardware to each VM. Each VM then runs its own operating system (OS) on this virtual hardware, alongside the applications it hosts.

While VMs provide strong isolation, this hardware virtualization approach introduces significant overhead. Each VM carries the burden of a full-fledged operating system, consuming substantial resources like storage (often tens of gigabytes per VM) and processing power. Booting up a VM can be time-consuming due to the OS initialization process.

Docker Containers: OS-Level Virtualization for Efficiency

Docker containers, in contrast, take an OS-level virtualization approach. Instead of virtualizing hardware, containers virtualize the operating system itself. This means containers share the host OS kernel. They don’t need to include a separate OS for each container, making them significantly lighter and more resource-efficient than VMs.

In the Docker ecosystem, the Docker daemon plays a role loosely analogous to the hypervisor in VMs: it creates, starts, stops, and manages containers. The analogy only goes so far, though. The daemon does not sit between a running container and the host OS; processes inside a container make system calls directly to the shared host kernel, which enforces the isolation itself using kernel features such as namespaces and control groups (cgroups). With no guest OS to boot and no hardware emulation in the path, containers start faster, consume fewer resources, and achieve higher density on the same hardware compared to VMs.
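You can see the shared kernel for yourself with a small Python script. This is a sketch rather than a rigorous test, and the file name `kernel_check.py` is hypothetical: run it on a Linux host with `python3 kernel_check.py`, then inside a container with `docker run --rm -v "$PWD":/app -w /app python:3.9-slim-buster python kernel_check.py`. Both runs should report the same kernel release, because the container has no kernel of its own.

```python
# kernel_check.py (hypothetical name): report the kernel this process runs on.
import platform


def kernel_info() -> dict:
    """Return the OS name and kernel release as seen by this Python process."""
    return {
        "system": platform.system(),    # e.g. "Linux"
        "release": platform.release(),  # kernel release string of the host kernel
    }


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel {info['release']}")
```

On macOS and Windows, Docker Desktop runs containers inside a lightweight Linux VM, so there the container reports that VM’s Linux kernel rather than the host operating system.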

Lightweight vs. Heavyweight: Performance and Resources

The fundamental difference in virtualization approach translates to significant performance and resource utilization disparities. VMs, each running a full guest OS, are considered heavyweight. They consume considerable disk space, memory, and CPU cycles, even when idle. Starting a VM involves booting up an entire OS, which can take minutes.

Docker containers, with OS-level virtualization, are lightweight. They share the host OS kernel and only package the application and its essential dependencies. This results in smaller container images (often megabytes in size), faster startup times (seconds), and significantly lower resource consumption. You can run many more containers than VMs on the same hardware, maximizing resource utilization and reducing infrastructure costs.

The efficiency of containers stems from avoiding redundancy. Each VM duplicates OS functionality already present in the host OS. Containers, on the other hand, reuse the host kernel and include only the bare minimum required for the application to run in isolation. This clever “misdirection” relies on kernel namespaces, which give each container its own view of processes, filesystem mounts, network interfaces, and hostname. The container sees what looks like a dedicated OS containing only the necessary components, without the overhead of a complete OS installation.
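On Linux you can inspect these per-process views directly: each process’s namespaces appear as symbolic links under `/proc/<pid>/ns/`. The sketch below (a hypothetical `ns_check.py`) prints them. Run it on the host and then in a container, for example with `docker run --rm -v "$PWD":/app -w /app python:3.9-slim-buster python ns_check.py`; the `pid`, `mnt`, `net`, `uts`, and `ipc` identifiers should differ between the two runs, while `user` usually matches unless the daemon has user-namespace remapping enabled.

```python
# ns_check.py (hypothetical name): list the namespaces this process belongs to.
import os

NAMESPACES = ("pid", "mnt", "net", "uts", "ipc", "user")


def namespace_ids(pid: str = "self") -> dict:
    """Map each namespace type to the kernel's identifier for it (Linux only)."""
    ids = {}
    for ns in NAMESPACES:
        try:
            # Link target format: "<type>:[<inode number>]"
            ids[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:
            pass  # not Linux, or this namespace type is unavailable
    return ids


if __name__ == "__main__":
    for ns, ident in namespace_ids().items():
        print(f"{ns:5} {ident}")
```

Two processes share a namespace exactly when their identifiers for it are equal, which is why comparing the host and container output shows which parts of the system the container sees privately.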

Diving into Docker: The Platform

Docker is the leading containerization platform, providing the tools to build, deploy, and manage containerized applications. While alternatives like Podman exist, Docker has become synonymous with containerization for many. The core Docker Engine is open source and free to use, while Docker Inc. offers commercial products and subscriptions, such as paid Docker Desktop plans. Users primarily interact with Docker through a command-line interface (CLI), which exposes every platform operation.

Docker Components: Daemon, Client, and Hub

Understanding the key components of Docker is essential to working with containers effectively.

- Docker Daemon: This is the background service that runs on the host machine. It’s the core of Docker, responsible for listening to API requests from the Docker client, managing Docker images, and handling the building, running, and distribution of containers. The daemon is the process that interacts directly with the operating system.

- Docker Client: This is the command-line tool (CLI) that users interact with to send commands to the Docker daemon. You use the Docker client to build images, run containers, and manage your Docker environment. Other types of clients, like graphical user interfaces (GUIs), also exist.

- Docker Hub: This is a public registry of Docker images, a vast repository containing a wide array of pre-built images. Think of it as an app store for container images. You can find official images maintained by Docker and the community, as well as user-created images. Docker Hub simplifies sharing and distributing container images. You can also run private registries to manage your organization’s images.
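The client and daemon talk over a documented HTTP API, the Docker Engine API. On Linux the daemon listens by default on the Unix socket `/var/run/docker.sock`, so a few lines of standard-library Python can play the client’s role. This is a minimal sketch, assuming that default socket path and a user with permission to access it (root or a member of the `docker` group); `UnixHTTPConnection` and `docker_api_get` are illustrative names, not part of any Docker library.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills in the HTTP Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()  # don't leak the socket if the daemon isn't reachable
            raise
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send `GET path` to the Docker daemon and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker API returned {resp.status}: {body[:200]!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, `docker_api_get("/version")["ApiVersion"]` returns the API version the daemon speaks, and `docker_api_get("/containers/json")` returns the running containers, the same data `docker ps` displays. For real projects, Docker’s official SDKs (such as the `docker` package for Python) wrap this API; the point of the sketch is that the CLI is just one client of the daemon.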

Essential Docker Terminology: Images, Containers, Dockerfile

Navigating the Docker world requires understanding some key terms:

- Images: Docker images are the blueprints for containers. They are read-only templates, made up of stacked filesystem layers plus metadata such as the default command, from which containers are created. Images encapsulate the application code, runtime environment, libraries, and dependencies. They are built from a Dockerfile.

- Containers: Containers are runtime instances of Docker images. When you run an image, Docker creates a container. Containers are the actual running environments where your applications execute. Multiple containers can be created from the same image.

- Dockerfile: A Dockerfile is a text file containing a set of instructions for building a Docker image. It specifies the base image, application code to copy, dependencies to install, and the command to run when the container starts. The Dockerfile defines the steps to create a reproducible and consistent container image.

Within Docker images, further terminology helps categorize and understand their origins:

- Base Images: These are images that form the foundation for other images. They have no parent image and typically represent a minimal operating system (e.g., Ubuntu, Alpine Linux).

- Child Images: These images are built upon base images, adding layers of functionality. Most Docker images you create will be child images, extending base images with your application and its specific requirements.

- Official Images: These are curated images officially maintained and supported by Docker or upstream projects. They are generally high-quality, secure, and well-documented. Official images often have single-word names (e.g., python, nginx, ubuntu).

- User Images: These images are created and shared by Docker users. They can be built upon base or child images and provide specific applications or functionalities. User images are typically identified with a username prefix (e.g., username/image-name).
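These naming conventions are more regular than they first appear. When you give the Docker CLI a short name such as `python`, it expands it to `docker.io/library/python:latest`: Docker Hub is the default registry, official images live under the `library` namespace, and `latest` is the default tag. The function below is a simplified sketch of that normalization (it ignores digests such as `@sha256:<digest>`); `parse_image_ref` and `ImageRef` are hypothetical names.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    namespace: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split a reference like "username/image-name:tag" into its parts (simplified)."""
    parts = ref.split("/")
    registry = "docker.io"  # Docker Hub is the default registry
    # A leading component counts as a registry host if it looks like one.
    first = parts[0]
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry = parts.pop(0)
    # Only the last component can carry a tag, so a registry port is never mistaken for one.
    repository, sep, tag = parts[-1].partition(":")
    tag = tag if sep else "latest"  # "latest" is the default tag
    namespace = "/".join(parts[:-1])
    if not namespace and registry == "docker.io":
        namespace = "library"  # official images live under "library"
    return ImageRef(registry, namespace, repository, tag)
```

For example, `parse_image_ref("username/image-name")` yields the `username` namespace and the `latest` tag, while `parse_image_ref("localhost:5000/team/app:v1")` recognizes `localhost:5000` as a private registry.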

Hands-on Example: Building and Running a Docker Container

Let’s solidify our understanding of what a Docker container is by walking through a practical example of creating and running a simple “Hello World” application in a Docker container.

Creating a Docker Image: The Dockerfile

First, assume you have a Python script named hello-world.py that simply prints “Hello world!”. To containerize this application, you need to create a Dockerfile in the same directory as your script.
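A minimal `hello-world.py` matching that description might look like this; wrapping the message in a function is only a stylistic choice, and running the file with `python hello-world.py` simply prints it.

```python
# hello-world.py: the entire application is one greeting.
def greeting() -> str:
    """Return the message the container prints."""
    return "Hello world!"


if __name__ == "__main__":
    print(greeting())
```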

```dockerfile
FROM python:3.9-slim-buster
WORKDIR /app
COPY . /app
CMD ["python", "hello-world.py"]
```

Let’s break down the instructions in this Dockerfile:

- `FROM python:3.9-slim-buster`: This line specifies the base image for your image. Here, we’re using the official `python:3.9-slim-buster` image from Docker Hub, which provides a Python 3.9 environment based on Debian Buster.

- `WORKDIR /app`: This sets the working directory inside the container to `/app`. Subsequent commands will be executed within this directory.

- `COPY . /app`: This instruction copies all files from the current directory on your host machine (where the Dockerfile is located) to the `/app` directory inside the container. This includes your `hello-world.py` script.

- `CMD ["python", "hello-world.py"]`: This defines the command to be executed when the container starts. Here, it instructs Docker to run the `hello-world.py` script using the Python interpreter.

Building and Running Your First Container

Once you have your Dockerfile, you can build a Docker image using the docker build command:

```shell
docker build -t hello-world-app:v1 .
```

- `docker build`: This is the Docker command to build an image.

- `-t hello-world-app:v1`: The `-t` flag tags your image with a name (`hello-world-app`) and a tag (`v1`). Tagging helps version your images.

- `.`: The `.` specifies the build context, which is the current directory containing the Dockerfile.

Docker will process the Dockerfile instructions, pulling the base image (if not already present), copying files, and configuring the image layers. After the build completes, you can list your locally available images using docker images.

Now, run a container from your newly built image using docker run:

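```shell
docker run hello-world-app:v1
```

You should see “Hello world!” printed in your terminal. The container executed the command defined in the Dockerfile (`CMD ["python", "hello-world.py"]`), printed the output, and then exited. Containers are ephemeral in this sense: a container runs only as long as its main process does. Once the script finishes, the container stops, although it remains on disk in a stopped state (visible with `docker ps -a`) until you remove it with `docker rm`. Passing `--rm` to `docker run` removes it automatically on exit.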

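To get an interactive shell instead, start a container with the `-it` flags (interactive mode plus a pseudo-TTY) and pass a command such as `bash`, which replaces the image’s default `CMD`:

```shell
docker run -it hello-world-app:v1 bash
```

This command starts a new container and drops you into a bash shell inside the container’s isolated environment. You can then explore the container’s file system, run commands such as `python hello-world.py`, and further interact with your application. Because `bash` is now the container’s main process, typing `exit` stops the container. To open a shell in a container that is already running, use `docker exec -it <container_id> bash` instead.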

To stop a running container, use docker stop followed by the container ID (you can get the ID using docker ps):

```shell
docker stop <container_id>
```

Running a Web Application in Docker

Let’s extend our example to a slightly more complex scenario: running a basic Flask web application in a Docker container. Assume you have a Flask app (app.py) and a requirements.txt file listing dependencies. You’ll need to modify the Dockerfile:

```dockerfile
FROM python:3.9-slim-buster
WORKDIR /app
COPY requirements.txt requirements.txt
RUN pip install -r requirements.txt
COPY . /app
EXPOSE 5000
CMD ["python", "app.py"]
```

Key changes in this Dockerfile:

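- `COPY requirements.txt requirements.txt` and `RUN pip install -r requirements.txt`: These lines copy the `requirements.txt` file and use `pip` to install the Flask dependency inside the container during the image build process. Copying `requirements.txt` on its own, before the rest of the code, lets Docker reuse the cached result of the slow `pip install` step on later builds as long as `requirements.txt` is unchanged, even when `app.py` changes.

- `EXPOSE 5000`: This instruction documents that the container listens on port 5000, the port Flask’s development server uses by default. `EXPOSE` does not by itself make the port reachable from the host; that happens with the `-p` flag at run time. Flask’s development server also binds to `127.0.0.1` by default, which inside a container accepts connections only from the container itself, so `app.py` should start the server with `app.run(host="0.0.0.0", port=5000)` for the port mapping to reach it.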
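Docker’s build cache is why that ordering matters. Roughly, each step’s cache key depends on the previous step’s key and on the instruction itself, and for `COPY` also on the contents of the copied files; once one step misses the cache, every later step is rebuilt. The Python below is a toy model of that rule, not Docker’s actual algorithm, and `layer_keys` and `rebuilt_steps` are hypothetical names.

```python
import hashlib


def layer_keys(instructions, files):
    """Return a toy cache key for each instruction, chained from its parent step.

    `files` maps file name -> content in the build context. In this model a
    COPY source is either a single file name or "." (the whole context).
    """
    keys = []
    parent = ""
    for instruction in instructions:
        h = hashlib.sha256()
        h.update(parent.encode() + b"\0")       # depends on every earlier step
        h.update(instruction.encode() + b"\0")  # and on the instruction text
        if instruction.startswith("COPY "):
            source = instruction.split()[1]
            for name in (sorted(files) if source == "." else [source]):
                # COPY also depends on the names and contents of the copied files.
                h.update(name.encode() + b"\0" + files[name].encode() + b"\0")
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(instructions, old_files, new_files):
    """List the instructions whose cache key changes between two builds."""
    old = layer_keys(instructions, old_files)
    new = layer_keys(instructions, new_files)
    return [step for step, a, b in zip(instructions, old, new) if a != b]
```

With the Dockerfile above, editing only `app.py` changes the key of `COPY . /app` and the steps after it, so the `pip install` layer is reused; editing `requirements.txt` invalidates everything from the first `COPY` onward.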

Build a new image for your web app:

```shell
docker build -t flask-web-app:v1 .
```

When running the container, you need to map the container’s port (5000) to a port on your host machine so you can access the web app from your browser. Use the `-p` flag for port mapping:

```shell
docker run -p 8888:5000 flask-web-app:v1
```

- `-p 8888:5000`: This maps port 8888 on your host machine to port 5000 inside the container (the format is `host_port:container_port`).

Now, open your web browser and navigate to http://localhost:8888. You should see your Flask web application running in the Docker container.
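If the page does not load, a quick check from a second terminal tells you whether anything is answering on the mapped port. This is a minimal sketch using only Python’s standard library; `check_app` is a hypothetical helper, and it deliberately bypasses any configured HTTP proxy because the target is local.

```python
import urllib.error
import urllib.request


def check_app(url: str = "http://localhost:8888/", timeout: float = 5.0):
    """Return the HTTP status code served at `url`, or None if nothing answers."""
    # An empty ProxyHandler disables proxies, so the request goes straight to the host.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, just not with a success status (e.g. 404).
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timed out, or the host could not be reached.
        return None


if __name__ == "__main__":
    print(check_app())
```

A `None` result while the container is running often means the app inside is listening only on `127.0.0.1` within the container rather than on `0.0.0.0`.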

Conclusion

Docker containers have become indispensable tools in modern software development and deployment workflows. Their portability, consistency, and efficiency streamline application delivery and management across diverse environments. Hopefully, this guide has provided a clear understanding of what a Docker container is, its benefits, and how to get started with Docker.

As you continue your journey with containers, exploring topics like container orchestration with Kubernetes, container security, and advanced Dockerfile techniques will further enhance your skills. The world of containerization is vast and constantly evolving, offering exciting possibilities for building and deploying applications in a scalable and reliable manner.

@lg_mooney | @endjin.com