In the realm of computing, the term “cache” (pronounced CASH) refers to a fundamental concept for optimizing performance. Whether it’s hardware or software, a cache acts as a temporary storage space designed to hold data for quicker access in a computing environment.

At its core, a cache is a small store of memory that is significantly faster, and more expensive per byte, than main system memory. Its primary function is to boost the performance of systems by storing frequently or recently accessed data closer to the component that needs it. This cached data resides temporarily in an easily accessible storage medium, positioned locally to the cache client and distinctly separate from the primary, bulk storage. Caching is a widespread technique employed by various components, including the central processing unit (CPU), software applications, web browsers, and operating systems.

The necessity for cache arises from the inherent limitations of bulk or main storage to keep pace with the rapid demands of clients. By introducing a cache layer, data access times are dramatically reduced, minimizing latency and substantially improving input/output (I/O) operations. Given that almost all application workloads are heavily reliant on I/O operations, the strategic use of caching becomes a cornerstone for enhancing overall application performance and responsiveness.

How Does a Cache System Work?

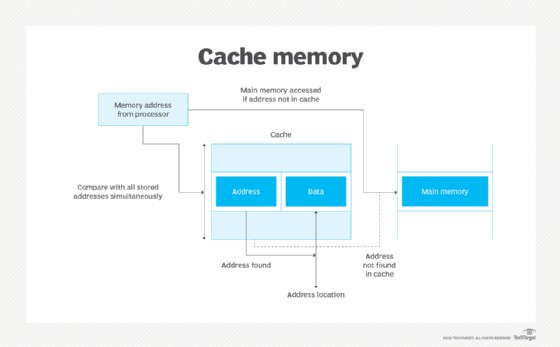

The operation of a cache is based on a simple yet effective principle. When a cache client needs to access data, the first step is to check the cache itself. If the requested data is found within the cache, this scenario is known as a cache hit. The efficiency of a cache is often quantified by the cache hit rate or ratio, which represents the percentage of data access attempts that result in a cache hit. A higher cache hit rate signifies a more effective cache in improving performance.

Conversely, if the data is not present in the cache, it’s termed a cache miss. In the event of a cache miss, the system retrieves the requested data from the slower backing store, such as main memory. Crucially, a copy of this retrieved data is then placed into the cache, so that subsequent requests for the same data are more likely to result in a cache hit.
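The hit and miss flow described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `ReadThroughCache` class, its counters, and the dictionary standing in for the slower backing store are all hypothetical names chosen for the example.

```python
class ReadThroughCache:
    """Toy cache that sits in front of a slower backing store."""

    def __init__(self, backing_store):
        # Assumption: a plain dict stands in for main memory or bulk storage.
        self.backing_store = backing_store
        self.entries = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.hits += 1              # cache hit: serve from the cache
            return self.entries[key]
        self.misses += 1                # cache miss: go to the backing store
        value = self.backing_store[key]
        self.entries[key] = value       # keep a copy for later requests
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


store = {"a": 1, "b": 2}
cache = ReadThroughCache(store)
for key in ["a", "b", "a", "a"]:
    cache.get(key)

# Two misses (first "a", first "b") and two hits (repeated "a").
print(f"hit ratio: {cache.hit_ratio():.0%}")  # prints "hit ratio: 50%"
```

The hit ratio here is simply hits divided by total lookups, which matches the percentage definition given earlier.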

The mechanisms governing how data is managed within the cache, including how new data is added and what data is evicted to make space, are dictated by sophisticated caching algorithms, cache protocols, and predefined system policies. These elements work in concert to optimize cache performance and ensure efficient data management.

Web browsers, such as Chrome, Firefox, and Safari, exemplify the practical application of caching through browser caching. This feature significantly enhances the user experience when accessing frequently visited webpages. When you visit a webpage, the various files required to display the page (like images, scripts, and stylesheets) are stored in a dedicated cache within your computer’s storage for that specific browser.

Upon revisiting the same webpage, the browser retrieves the majority of these files from its local cache rather than re-downloading them from the web server. This use of the cache, known as a read cache, lets the browser load data from local storage much faster than fetching it again over the network. This drastically reduces page load times and improves browsing speed.

Caching matters for performance because it provides rapid data access and reduces the load on main memory and servers.

Cache memory, in particular, is a critical hardware component, often integrated directly into the CPU or embedded within a chip on the system board. In modern computers, increasing cache memory typically involves upgrading the system board and CPU to newer generations. However, older system boards might offer expansion slots for increasing cache memory.

Common Applications of Caches

Caches are versatile and used across various computing domains, employing both hardware and software components to store temporary files and data.

CPU cache stands out as a prominent example of hardware cache. It’s a small, high-speed memory unit located directly on the computer’s processor. This cache stores frequently or recently used instructions and data, enabling the CPU to access them much faster than retrieving them from main memory. This significantly accelerates processing speeds and overall system responsiveness.

Beyond hardware, numerous applications and software programs also utilize their own caches. This application cache serves to temporarily store application-specific data, files, or instructions. This local storage allows for rapid retrieval, improving the application’s performance and reducing reliance on slower storage mediums.
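As one illustration of an application-level cache, Python’s standard library provides `functools.lru_cache`, which keeps the results of recent function calls in memory. The sketch below is only an example: `load_setting` is a hypothetical stand-in for a slow lookup, and the `calls` counter exists purely to show how often the real work runs.

```python
from functools import lru_cache

calls = 0  # counts how many times the underlying lookup actually runs


@lru_cache(maxsize=128)
def load_setting(name):
    """Hypothetical slow lookup; results are cached per argument."""
    global calls
    calls += 1
    return name.upper()


load_setting("theme")   # first call: computed and stored in the cache
load_setting("theme")   # second call: served from the cache

info = load_setting.cache_info()
print(calls, info.hits, info.misses)  # prints "1 1 1"
```

The `maxsize` argument bounds how many results are kept, which ties in with the sizing concerns discussed later in this article.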

Web browsers provide an excellent illustration of application caching in action. As previously mentioned, browsers incorporate caches to store information from past browsing sessions. This cached data is then readily available for subsequent sessions, leading to faster loading times for frequently visited websites and resources. For instance, returning to a video page can be quicker when the browser is able to reuse files, such as thumbnails, scripts, and any media it saved during the previous viewing session.

Beyond web browsers, numerous other software types benefit from caching mechanisms, including:

- Operating Systems: OS caches improve file access and application loading times.

- Databases: Database caches accelerate query speeds by storing frequently accessed data in memory.

- Content Delivery Networks (CDNs): CDNs use caches to store website content geographically closer to users, reducing latency and improving website loading speeds globally.

- DNS Servers: DNS caches store mappings between domain names and IP addresses, speeding up website lookups.

Benefits of Implementing Caches

The adoption of caching technologies brings forth a multitude of advantages, significantly impacting system performance and user experience:

- Enhanced Performance: The most prominent benefit of caching is the substantial improvement in speed and performance. By storing frequently accessed data closer to the processing unit, caches minimize data retrieval times. For example, browser caches drastically reduce webpage loading times, and database caches accelerate data retrieval for queries.

- Offline Functionality: Caches enable applications to operate, at least partially, even without a persistent internet connection. By providing quick access to recently or frequently used data stored in the cache, applications can maintain core functionalities in offline scenarios. However, a cache holds only what was previously stored in it, so not all application features may be available in offline mode.

- Resource Efficiency: Beyond speed gains, caching contributes to resource conservation in physical devices. Faster data access from cache reduces the strain on system resources, leading to benefits like reduced battery consumption in mobile devices. By minimizing the need to access slower main storage, caches optimize overall resource utilization.

Drawbacks and Considerations of Caching

While caches offer numerous benefits, it’s important to be aware of potential drawbacks and issues associated with their use:

- Data Corruption: Cache data can become corrupted, rendering the stored information unusable. Data corruption in caches can lead to application instability, browser crashes, or the display of incorrect data. Regularly clearing the cache can sometimes mitigate corruption issues.

- Performance Degradation (If Oversized): Caches are typically designed as small, temporary memory stores. If a cache grows excessively large, it can paradoxically lead to performance degradation. An overly large cache can consume memory resources that other applications might require, potentially negatively impacting overall system performance. Efficient cache management and appropriate sizing are crucial.

- Outdated Information: A common issue with caching is the potential to display outdated information. If a cached version of a website or application is used after updates have been deployed online, users might see an older, inaccurate version. Static content that rarely changes is largely unaffected, but dynamic content that changes frequently can become stale quickly, leading to discrepancies and user confusion.
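One common way to limit the outdated-information problem is to give each cache entry an expiry time, often called a time to live (TTL). The sketch below is a minimal example under stated assumptions: `TTLCache` and its methods are hypothetical names, and the clock is injectable only so the behaviour can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Toy cache whose entries expire after a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock        # assumption: any zero-argument function returning seconds
        self.entries = {}         # key -> (value, expiry time)

    def put(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        item = self.entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[key]  # stale entry: drop it so the caller refetches
            return None
        return value


now = [0.0]                        # fake clock for the demonstration
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("homepage", "<html>v1</html>")

fresh = cache.get("homepage")      # still within 60 seconds: returned
now[0] = 61.0
stale = cache.get("homepage")      # expired: None, so the caller must refetch
```

A shorter TTL reduces the chance of serving stale data at the cost of more frequent refetching, so the value is a trade-off rather than a fixed rule.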

Cache Algorithms: Managing Cache Data

Cache algorithms are sets of instructions that govern cache maintenance and data management. These algorithms determine which data is stored in the cache and when data is evicted to make room for new information. Several common cache algorithms exist, each with its own approach to optimizing cache performance:

- Least Frequently Used (LFU): The LFU algorithm tracks the access frequency of each item in the cache. When space is needed, the item that has been accessed the least number of times is removed first. LFU prioritizes keeping frequently accessed items in the cache.

- Least Recently Used (LRU): LRU keeps track of the last time each item in the cache was accessed. Items that have been accessed more recently are kept closer to the “top” of the cache. When the cache reaches its capacity, the items that have not been accessed for the longest period (least recently used) are removed. LRU assumes that recently accessed data is more likely to be accessed again soon.

- Most Recently Used (MRU): In contrast to LRU, the MRU algorithm removes the most recently accessed items first. MRU is less common but can be effective in specific scenarios where older data is more likely to be reused.
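The eviction rules above can be sketched compactly in Python. These are illustrative toy classes, not reference implementations: `LRUCache` and `LFUCache` are hypothetical names, both assume a capacity of at least one, and the LFU version breaks ties arbitrarily.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the entry that has gone unused for the longest time."""

    def __init__(self, capacity):
        self.capacity = capacity          # assumed to be >= 1
        self.entries = OrderedDict()      # oldest access first, newest last

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)     # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        if key in self.entries:
            self.entries.move_to_end(key)
        elif len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        self.entries[key] = value


class LFUCache:
    """Evicts the entry with the fewest recorded accesses."""

    def __init__(self, capacity):
        self.capacity = capacity          # assumed to be >= 1
        self.entries = {}
        self.counts = {}                  # key -> number of accesses

    def get(self, key):
        if key not in self.entries:
            return None
        self.counts[key] += 1
        return self.entries[key]

    def put(self, key, value):
        if key not in self.entries and len(self.entries) >= self.capacity:
            victim = min(self.counts, key=self.counts.get)  # fewest accesses
            del self.entries[victim]
            del self.counts[victim]
        self.entries[key] = value
        self.counts[key] = self.counts.get(key, 0) + 1


lru = LRUCache(2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")        # "a" is now the most recently used
lru.put("c", 3)     # evicts "b", the least recently used

lfu = LFUCache(2)
lfu.put("a", 1)
lfu.put("b", 2)
lfu.get("a")
lfu.get("a")        # "a" has been accessed more often than "b"
lfu.put("c", 3)     # evicts "b", the least frequently used
```

In this sketch, changing `popitem(last=False)` to `popitem(last=True)` in `LRUCache.put` would turn it into an MRU cache, since the newest entry sits at the end of the ordered dictionary.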

Cache Policies: Defining Cache Behavior

Cache policies are a set of rules that dictate how the cache operates, particularly concerning write operations and data consistency. Different policies offer varying trade-offs between performance, data integrity, and latency:

- Write-Around Cache: In a write-around cache policy, write operations are sent directly to the main storage, bypassing the cache entirely. This prevents the cache from being overwhelmed by large volumes of write I/O. The disadvantage is that newly written data is not cached until it is subsequently read from storage, so the first read of recently written data is slower.

- Write-Through Cache: With a write-through cache, data is written simultaneously to both the cache and the main storage. The primary advantage of write-through is that newly written data is always present in the cache, ensuring fast read access. A drawback is that write operations are not considered complete until the data is written to both the cache and primary storage, potentially introducing latency into write operations.

- Write-Back Cache: Similar to write-through, write-back cache directs all write operations initially to the cache. However, in write-back, the write operation is considered complete as soon as the data is cached. The data is then copied from the cache to the main storage at a later point. This approach results in low latency for both read and write operations. The primary downside is that data in the cache is vulnerable to loss until it is successfully committed to the main storage. Data loss could occur in the event of a system crash or power failure before the cached data is written to permanent storage.

Different caching policies are chosen based on the specific application requirements and the desired balance between performance, data reliability, and consistency.
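The three write policies can be compared side by side in a small sketch. Everything here is hypothetical and simplified: `PolicyCache` uses dictionaries for both the cache and the main storage, the `flush` method stands in for whatever later point a real write-back cache chooses to persist data, and dropping the cached copy on a write-around write is an added assumption to avoid serving a stale value.

```python
class PolicyCache:
    """Toy cache demonstrating write-through, write-back, and write-around."""

    def __init__(self, policy):
        self.policy = policy
        self.cache = {}
        self.storage = {}      # stands in for main storage
        self.dirty = set()     # keys cached but not yet written to storage

    def write(self, key, value):
        if self.policy == "write-around":
            self.storage[key] = value
            self.cache.pop(key, None)      # assumption: invalidate any old copy
        elif self.policy == "write-through":
            self.cache[key] = value        # both copies updated before returning
            self.storage[key] = value
        elif self.policy == "write-back":
            self.cache[key] = value        # done as soon as the data is cached
            self.dirty.add(key)
        else:
            raise ValueError(f"unknown policy: {self.policy}")

    def flush(self):
        """Copy pending write-back data to main storage."""
        for key in self.dirty:
            self.storage[key] = self.cache[key]
        self.dirty.clear()

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.storage[key]   # miss: load and keep a copy
        return self.cache[key]


wb = PolicyCache("write-back")
wb.write("x", 1)
pending = "x" not in wb.storage   # True: the data exists only in the cache so far
wb.flush()                        # now the data is in main storage as well
```

The `pending` window is exactly where write-back data is vulnerable: if the process stopped before `flush`, the value would never reach main storage.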

Clearing Cache: When and Why

Clearing the cache is a common maintenance task that frees up storage space on a device. Browser caches, in particular, use local storage to hold downloaded web files. Clearing the cache can resolve various user issues, such as:

- Application Crashes or Loading Problems: A full cache can lead to applications crashing or failing to load correctly due to insufficient memory.

- Incorrect Webpage Loading: Old cache data can contain outdated information and files, causing webpages to display incorrectly or not load at all. Clearing the cache forces the browser to fetch the latest versions of webpage resources.

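- Privacy Concerns: Browser caches hold copies of pages, images, and other files from sites you have visited, which can reveal browsing activity and may include personal information. Clearing the cache removes this stored data. Saved passwords and browsing history are stored separately and are cleared through their own options.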

While clearing the cache can resolve issues and free up space, it’s generally recommended to clear it periodically, not daily. Frequent cache clearing can negate the performance benefits of caching due to these factors:

- Loss of Quick Access: Clearing the cache eliminates the advantage of fast file access for frequently used resources.

- Redundant Maintenance: Caches are designed to automatically manage their contents, deleting some files on their own as needed. Manual clearing too frequently can be unnecessary.

- Cache Rebuild: After clearing, the system will immediately begin caching new files, quickly filling up the space again.

How to Clear Browser Cache

Browser caches are the most commonly cleared caches by end-users. In most web browsers, clearing the cache is easily accessible through settings or preferences menus. These menus also typically include options for managing privacy settings, cookies, and browsing history.

Many desktop web browsers offer keyboard shortcuts for quick access to these settings menus:

- Windows: Ctrl + Shift + Delete

- macOS: Command + Shift + Delete

These shortcuts will open the settings menu, which may vary slightly depending on the specific browser.

Here’s a step-by-step guide on how to clear the cache in Google Chrome:

- Press Ctrl + Shift + Delete (Windows) or Command + Shift + Delete (Mac). In Chrome this typically opens the Clear browsing data dialog directly.

- If the dialog does not appear, open Chrome’s settings and navigate to the “Privacy and security” section.

- Click on Clear browsing data.

- In the “Time range” dropdown menu, choose a time period. To clear the entire cache, select All time.

- Ensure the checkbox next to Cached images and files is selected.

- Click the Clear data button.

The process is similar for other browsers like Firefox, Safari, and Edge, typically found within their respective settings or history menus.

Different Types of Caches

Caching is a broad concept applied in various forms throughout computing systems. Here are some key types of caches:

- Cache Memory: Often referred to as CPU cache, this is a type of RAM that a microprocessor can access much faster than regular system RAM. It’s typically integrated directly with the CPU and is used to cache frequently accessed instructions and data. RAM cache is faster than disk-based cache, but CPU cache is even faster due to its proximity to the CPU.

- Cache Server (Proxy Cache): A dedicated network server or service that stores webpages and other internet content locally. Cache servers, also known as proxy caches, reduce network traffic and improve website loading speeds for users by serving cached content instead of fetching it from the origin server every time.

- CPU Cache: As mentioned earlier, CPU cache is a small, fast memory unit located on the CPU itself. It operates at the CPU’s speed, significantly faster than system bus speed and RAM. CPU caches are crucial for reducing CPU wait times and accelerating processing.

- Disk Cache: Disk caches store recently read data and sometimes adjacent data blocks that are likely to be accessed soon. Some disk caches utilize algorithms to identify and cache “hot blocks” (frequently read storage blocks), automatically improving disk I/O performance.

- Flash Cache (SSD Caching): Flash cache, also known as solid-state drive (SSD) caching, leverages NAND flash memory chips to temporarily store data. Flash caches offer faster data access compared to traditional hard disk drives or backing storage, boosting application and system performance.

- Persistent Cache: Persistent cache is a type of storage capacity where cached data is preserved even in the event of system reboots or crashes. This is typically achieved through battery backups or by flushing data to battery-backed dynamic RAM (DRAM) to protect against data loss and ensure data availability after interruptions.

- RAM Cache: RAM cache usually consists of memory permanently embedded on the motherboard together with memory modules installed in dedicated slots. Access to RAM cache is provided through the mainboard bus. While slower than CPU cache, RAM cache is significantly faster than magnetic media, offering faster response times for I/O operations.

- Translation Lookaside Buffer (TLB): TLB is a specialized memory cache that stores recent translations of virtual memory addresses to physical addresses. TLBs are used to speed up virtual memory operations by reducing the need for repeated address translations, enhancing system performance in virtual memory environments.
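To make the TLB idea concrete, the sketch below models address translation in software. It is only an analogy for what hardware does: the 4096-byte page size, the contents of `page_table`, and the `translate` function are all assumptions made for the example.

```python
PAGE_SIZE = 4096                    # assumed page size in bytes

page_table = {0: 7, 1: 3, 2: 9}     # hypothetical virtual page -> physical frame
tlb = {}                            # small cache of recent translations
tlb_hits = 0


def translate(virtual_address):
    """Translate a virtual address, consulting the TLB before the page table."""
    global tlb_hits
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page in tlb:
        tlb_hits += 1               # translation already cached
        frame = tlb[page]
    else:
        frame = page_table[page]    # slower lookup in the full page table
        tlb[page] = frame           # remember it for the next access
    return frame * PAGE_SIZE + offset


first = translate(4100)    # page 1, offset 4: page-table lookup, result 12292
second = translate(4200)   # page 1 again: served from the TLB, result 12392
```

Both addresses fall on the same virtual page, so only the first access pays for the page-table lookup.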
