What Is Cuda? It’s a parallel computing architecture that enables significant acceleration for a wide range of applications. At WHAT.EDU.VN, we understand you’re looking for answers, and we’re here to provide them in a clear, accessible way. Unlock the potential of parallel processing and discover how it can revolutionize computation, boosting performance and efficiency. Dive into the world of CUDA and explore GPU acceleration today.

1. Understanding CUDA: A Deep Dive

CUDA, which stands for Compute Unified Device Architecture, is a parallel computing platform and programming model developed by NVIDIA. Launched in 2006, it allows software to use a graphics processing unit (GPU) for general purpose processing.

1.1. What Makes CUDA Unique?

Unlike traditional CPUs (Central Processing Units), GPUs have a massively parallel architecture consisting of thousands of smaller, more efficient cores designed for handling multiple tasks simultaneously. CUDA unlocks this capability, enabling developers to write programs that harness the GPU’s processing power for applications beyond graphics rendering. It’s not just a programming language or API; it’s an ecosystem.

1.2. How CUDA Works

CUDA works by providing a set of extensions to popular programming languages like C, C++, and Fortran. These extensions allow developers to define kernels, which are functions that execute in parallel across multiple GPU cores.

Here’s a simplified breakdown:

- CPU vs. GPU: The CPU handles tasks sequentially, while the GPU handles many tasks in parallel.

- Kernels: Defined by the programmer to be executed on the GPU.

- Memory Management: CUDA handles the transfer of data between CPU and GPU memory.

- Parallel Execution: The GPU executes the kernel across thousands of threads simultaneously.

1.3. The CUDA Ecosystem

The CUDA ecosystem includes:

- CUDA Toolkit: Contains compilers, libraries, and tools for developing CUDA applications.

- CUDA Runtime: Manages the execution of CUDA programs on the GPU.

- CUDA Libraries: Provide optimized routines for common tasks like linear algebra, signal processing, and image processing.

- Developer Tools: Debugging, profiling, and optimization tools to improve CUDA application performance.

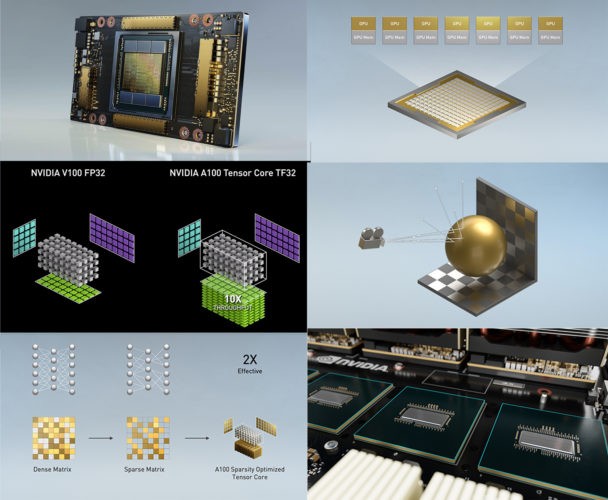

NVIDIA Ampere GPU architecture showcasing MIG, Tensor Cores, RT Cores, structural sparsity, and NVLink

NVIDIA Ampere GPU architecture showcasing MIG, Tensor Cores, RT Cores, structural sparsity, and NVLink

2. Applications of CUDA: Where is CUDA Used?

CUDA’s parallel processing capabilities make it ideal for a variety of computationally intensive applications across diverse fields. Here are some key areas where CUDA is widely used:

2.1. High-Performance Computing (HPC)

CUDA plays a crucial role in HPC, enabling scientists and researchers to tackle complex simulations and data analysis tasks faster and more efficiently.

- Scientific Simulations: Weather forecasting, climate modeling, molecular dynamics simulations, and computational fluid dynamics benefit significantly from CUDA’s acceleration.

- Data Analysis: Processing large datasets in fields like genomics, astrophysics, and finance becomes more manageable.

2.2. Deep Learning and Artificial Intelligence

CUDA has become the foundation for deep learning, accelerating the training and inference of neural networks.

- Image Recognition: Training convolutional neural networks (CNNs) for image classification and object detection.

- Natural Language Processing (NLP): Accelerating recurrent neural networks (RNNs) and transformers for language translation and text generation.

- Recommendation Systems: Building and training models for personalized recommendations in e-commerce and entertainment.

2.3. Medical Imaging

CUDA enables faster and more accurate medical image processing, aiding in diagnosis and treatment planning.

- CT Scans and MRIs: Accelerating the reconstruction and analysis of 3D medical images.

- Image Segmentation: Automating the process of identifying and delineating organs and tissues in medical images.

- Radiation Therapy Planning: Optimizing radiation dose distributions for cancer treatment.

2.4. Finance

Financial institutions use CUDA to accelerate quantitative analysis, risk management, and algorithmic trading.

- Monte Carlo Simulations: Pricing complex financial derivatives and assessing risk.

- Algorithmic Trading: Executing trading strategies with low latency and high frequency.

- Fraud Detection: Identifying fraudulent transactions in real-time.

2.5. Automotive

CUDA is essential for developing advanced driver-assistance systems (ADAS) and autonomous driving technologies.

- Sensor Fusion: Processing data from cameras, radar, and lidar sensors to create a comprehensive view of the vehicle’s surroundings.

- Path Planning: Computing optimal driving routes in real-time.

- Object Detection and Tracking: Identifying and tracking other vehicles, pedestrians, and obstacles.

2.6. Other Applications

- Video Processing: Encoding, decoding, and editing high-resolution video.

- Cryptography: Accelerating encryption and decryption algorithms.

- Gaming: Enhancing graphics rendering and physics simulations.

3. Benefits of Using CUDA

Leveraging CUDA offers numerous advantages, making it a powerful tool for developers and researchers.

3.1. Performance Gains

The most significant benefit of CUDA is the potential for dramatic performance improvements compared to CPU-based solutions. By offloading computationally intensive tasks to the GPU, applications can achieve speedups of up to 100x or more.

3.2. Scalability

CUDA applications can scale seamlessly to take advantage of multiple GPUs in a single system or across a cluster. This allows users to tackle even larger and more complex problems.

3.3. Flexibility

CUDA supports a wide range of programming languages and libraries, giving developers the flexibility to choose the tools that best suit their needs.

3.4. Mature Ecosystem

With over a decade of development and a large community of users, CUDA has a mature ecosystem with extensive documentation, libraries, and tools.

3.5. Wide Adoption

CUDA is widely adopted across various industries and research communities, making it a valuable skill for developers and researchers.

4. Getting Started with CUDA Programming

If you’re interested in exploring CUDA programming, here’s a step-by-step guide to get you started.

4.1. Prerequisites

- NVIDIA GPU: You’ll need a computer with an NVIDIA GPU that supports CUDA.

- Operating System: CUDA supports Windows, Linux, and macOS.

- CUDA Toolkit: Download and install the CUDA Toolkit from the NVIDIA Developer website.

- Compiler: You’ll need a C/C++ compiler like GCC or Visual Studio.

4.2. Installation

- Download CUDA Toolkit: Visit the NVIDIA Developer website and download the CUDA Toolkit for your operating system.

- Install the Toolkit: Follow the installation instructions provided by NVIDIA. This will install the CUDA compiler (nvcc), libraries, and tools.

- Verify Installation: After installation, verify that CUDA is properly installed by running the

nvcc --versioncommand in your terminal.

4.3. Writing Your First CUDA Program

Here’s a simple example of a CUDA program that adds two vectors:

#include <iostream>

#include <cuda_runtime.h>

// Kernel function to add two vectors

__global__ void addVectors(float *a, float *b, float *c, int n) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

if (i < n) {

c[i] = a[i] + b[i];

}

}

int main() {

int n = 1024; // Size of the vectors

float *h_a, *h_b, *h_c; // Host (CPU) memory

float *d_a, *d_b, *d_c; // Device (GPU) memory

// Allocate host memory

h_a = new float[n];

h_b = new float[n];

h_c = new float[n];

// Initialize host vectors

for (int i = 0; i < n; i++) {

h_a[i] = i;

h_b[i] = i * 2;

}

// Allocate device memory

cudaMalloc((void**)&d_a, n * sizeof(float));

cudaMalloc((void**)&d_b, n * sizeof(float));

cudaMalloc((void**)&d_c, n * sizeof(float));

// Copy data from host to device

cudaMemcpy(d_a, h_a, n * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_b, h_b, n * sizeof(float), cudaMemcpyHostToDevice);

// Define the grid and block dimensions

int blockSize = 256;

int numBlocks = (n + blockSize - 1) / blockSize;

// Launch the kernel

addVectors<<<numBlocks, blockSize>>>(d_a, d_b, d_c, n);

// Copy the result from device to host

cudaMemcpy(h_c, d_c, n * sizeof(float), cudaMemcpyDeviceToHost);

// Print the result

for (int i = 0; i < 10; i++) {

std::cout << h_a[i] << " + " << h_b[i] << " = " << h_c[i] << std::endl;

}

// Free device memory

cudaFree(d_a);

cudaFree(d_b);

cudaFree(d_c);

// Free host memory

delete[] h_a;

delete[] h_b;

delete[] h_c;

return 0;

}4.4. Compiling and Running the Program

-

Save the code: Save the code as

vectorAdd.cu. -

Compile: Use the CUDA compiler

nvccto compile the code:nvcc vectorAdd.cu -o vectorAdd -

Run: Execute the compiled program:

./vectorAdd

This will print the sum of the first 10 elements of the two vectors.

5. CUDA-X: Extending CUDA Capabilities

NVIDIA CUDA-X is a collection of libraries, tools, and technologies built on top of CUDA that boost performance across multiple application domains from AI to HPC.

5.1. Key CUDA-X Libraries

- cuBLAS: Basic Linear Algebra Subroutines for performing common linear algebra operations on the GPU.

- cuFFT: Fast Fourier Transform library for accelerating signal processing applications.

- cuSPARSE: Library for performing sparse matrix operations on the GPU.

- cuDNN: Deep Neural Network library for accelerating deep learning training and inference.

- NVGRAPH: Graph analytics library for performing graph algorithms on the GPU.

5.2. Benefits of CUDA-X

- Optimized Performance: CUDA-X libraries are highly optimized for NVIDIA GPUs, providing significant performance gains.

- Ease of Use: The libraries are designed to be easy to use, allowing developers to quickly integrate GPU acceleration into their applications.

- Wide Range of Applications: CUDA-X covers a wide range of application domains, from AI to HPC.

6. Frequently Asked Questions (FAQs) About CUDA

To further clarify common questions and concerns about CUDA, here is a list of FAQs:

| Question | Answer |

|---|---|

| What is the difference between CUDA and OpenCL? | CUDA is NVIDIA’s proprietary parallel computing platform and programming model, while OpenCL is an open standard. CUDA is generally more optimized for NVIDIA GPUs, while OpenCL aims for portability across different hardware platforms. |

| Do I need a powerful GPU to use CUDA? | While a more powerful GPU will provide better performance, you can still use CUDA with a less powerful GPU. The key is to optimize your code for the specific hardware you’re using. |

| Can I use CUDA with Python? | Yes, you can use CUDA with Python using libraries like PyCUDA and Numba. These libraries provide a Python interface to the CUDA API, allowing you to write and execute CUDA code from Python. |

| Is CUDA only for NVIDIA GPUs? | Yes, CUDA is specifically designed for NVIDIA GPUs. |

| What are the alternatives to CUDA? | Alternatives to CUDA include OpenCL, SYCL, and vendor-specific APIs like Intel’s oneAPI. |

| How can I optimize my CUDA code? | Optimizing CUDA code involves techniques like minimizing data transfers between CPU and GPU, maximizing parallel execution, and using shared memory effectively. NVIDIA provides profiling tools to help identify performance bottlenecks in your code. |

| What is the role of CUDA in machine learning? | CUDA plays a vital role in machine learning by accelerating the training and inference of neural networks. Libraries like cuDNN provide optimized routines for deep learning operations, allowing researchers and developers to train complex models faster. |

| How does CUDA support parallel processing? | CUDA supports parallel processing by allowing developers to write code that executes concurrently on multiple GPU cores. The CUDA programming model provides abstractions for managing threads, blocks, and grids, enabling developers to express parallelism at different levels of granularity. |

| What are the limitations of CUDA? | Some limitations of CUDA include its dependency on NVIDIA GPUs and the complexity of writing and optimizing CUDA code. |

| Where can I find CUDA tutorials and resources? | NVIDIA provides extensive documentation, tutorials, and examples on its developer website. There are also numerous online courses, books, and community forums dedicated to CUDA programming. |

7. The Future of CUDA

CUDA continues to evolve with new features and optimizations to take advantage of the latest GPU architectures. NVIDIA is committed to supporting and expanding the CUDA ecosystem, ensuring its continued relevance in the future of computing.

7.1. Key Trends

- Increased Integration with AI Frameworks: CUDA is becoming increasingly integrated with popular AI frameworks like TensorFlow and PyTorch, making it easier for developers to build and deploy AI applications.

- Support for New Hardware Features: CUDA is constantly updated to support new hardware features in NVIDIA GPUs, such as Tensor Cores and NVLink.

- Expansion into New Application Domains: CUDA is expanding into new application domains like data science, genomics, and robotics.

7.2. NVIDIA’s Commitment

NVIDIA is committed to providing developers with the tools and resources they need to succeed with CUDA. This includes:

- Ongoing Development of the CUDA Toolkit: NVIDIA continues to invest in the CUDA Toolkit, adding new features and optimizations.

- Extensive Documentation and Support: NVIDIA provides extensive documentation, tutorials, and support resources for CUDA developers.

- Collaboration with the Open Source Community: NVIDIA collaborates with the open-source community to integrate CUDA into popular libraries and frameworks.

8. CUDA vs. Other Parallel Computing Frameworks

While CUDA is a powerful and widely used parallel computing framework, it’s essential to understand how it compares to other options. Here’s a brief overview of some key alternatives:

| Framework | Developer | Key Features | Pros | Cons |

|---|---|---|---|---|

| CUDA | NVIDIA | – Optimized for NVIDIA GPUs – Extensive library support – Mature ecosystem | – Excellent performance on NVIDIA GPUs – Wide adoption in AI and HPC – Comprehensive tools and documentation | – NVIDIA-specific – Can be complex to optimize |

| OpenCL | Khronos Group | – Open standard – Cross-platform support – Wide range of hardware support | – Portable across different hardware platforms – Open standard – No vendor lock-in | – Can be less optimized than CUDA for specific hardware – More complex programming model |

| SYCL | Khronos Group | – Higher-level programming model than OpenCL – C++ based – Targets heterogeneous computing | – More modern and easier to use than OpenCL – C++ based – Vendor-agnostic | – Relatively new compared to CUDA and OpenCL – Less mature ecosystem |

| oneAPI | Intel | – Cross-architecture programming model – Supports CPUs, GPUs, and FPGAs – Based on SYCL | – Targets a wide range of hardware – Backed by Intel – Based on a modern programming model | – Relatively new – Performance may vary across different hardware platforms |

Each framework has its strengths and weaknesses, and the best choice depends on the specific requirements of your application and the hardware you’re targeting.

9. Practical Examples of CUDA in Action

To illustrate the power and versatility of CUDA, let’s look at some real-world examples of how it’s being used in different industries:

9.1. Healthcare: Drug Discovery

Pharmaceutical companies are using CUDA to accelerate drug discovery by simulating the interactions between drug candidates and target proteins. These simulations require massive computational power, and CUDA enables researchers to screen millions of potential drug candidates in a fraction of the time compared to traditional CPU-based methods. This accelerates the process of identifying promising new treatments for diseases like cancer and Alzheimer’s.

9.2. Automotive: Autonomous Driving

Self-driving cars rely on CUDA to process data from multiple sensors, including cameras, radar, and lidar, in real-time. CUDA enables the vehicle to perceive its surroundings, identify objects, and make decisions about how to navigate safely. The low latency and high throughput of CUDA are essential for ensuring the safety and reliability of autonomous driving systems.

9.3. Retail: Personalized Recommendations

E-commerce companies use CUDA to analyze customer purchase data and browsing history to generate personalized product recommendations. These recommendations are powered by machine learning models that are trained on massive datasets. CUDA accelerates the training and inference of these models, allowing retailers to provide more relevant and timely recommendations to their customers, increasing sales and customer satisfaction.

9.4. Scientific Research: Climate Modeling

Climate scientists use CUDA to run complex climate models that simulate the Earth’s atmosphere and oceans. These models are used to study the effects of climate change and to predict future climate scenarios. CUDA enables researchers to run these models at higher resolutions and with more complex physics, providing a more accurate understanding of the Earth’s climate system.

These are just a few examples of how CUDA is being used to solve real-world problems across a wide range of industries. As GPU technology continues to advance, we can expect to see even more innovative applications of CUDA in the future.

10. Contributing to the CUDA Community

The CUDA community is vibrant and supportive, offering numerous opportunities for developers to connect, collaborate, and learn from each other. Here are some ways you can get involved:

10.1. NVIDIA Developer Forums

The NVIDIA Developer Forums are a great place to ask questions, share your knowledge, and connect with other CUDA developers. You can find answers to common problems, discuss best practices, and get feedback on your code.

10.2. Open Source Projects

Contribute to open-source projects that use CUDA. This is a great way to improve your skills, learn from experienced developers, and give back to the community. Many libraries and frameworks that use CUDA are open source, such as TensorFlow, PyTorch, and OpenCV.

10.3. CUDA Meetups and Conferences

Attend CUDA meetups and conferences to network with other developers, learn about the latest advances in CUDA technology, and hear from industry experts. NVIDIA hosts several events throughout the year, such as the GPU Technology Conference (GTC), which is a premier event for developers working with GPUs.

10.4. Write Blog Posts and Tutorials

Share your knowledge and experience by writing blog posts and tutorials about CUDA programming. This is a great way to help others learn CUDA and to establish yourself as an expert in the field. You can write about specific CUDA techniques, share your experiences with different CUDA libraries, or provide step-by-step instructions for solving common problems.

10.5. Report Bugs and Suggest Features

Help improve CUDA by reporting bugs and suggesting new features to NVIDIA. This is a valuable way to contribute to the development of CUDA and to ensure that it meets the needs of the community. You can report bugs and suggest features through the NVIDIA Developer website.

By getting involved in the CUDA community, you can connect with other developers, learn new skills, and contribute to the advancement of CUDA technology.

11. Best Practices for CUDA Development

To write efficient and maintainable CUDA code, it’s essential to follow best practices. Here are some key guidelines to keep in mind:

11.1. Minimize Data Transfers

Data transfers between the CPU and GPU are slow, so minimize them as much as possible. Try to perform as much computation as possible on the GPU and avoid transferring data back and forth unnecessarily.

11.2. Maximize Parallelism

Take full advantage of the GPU’s parallel architecture by designing your code to execute concurrently on multiple cores. Use techniques like thread-level parallelism, block-level parallelism, and grid-level parallelism to maximize the utilization of the GPU.

11.3. Use Shared Memory

Shared memory is a fast on-chip memory that can be accessed by all threads within a block. Use shared memory to share data between threads and to reduce the number of accesses to global memory, which is slower.

11.4. Optimize Memory Access Patterns

Access memory in a coalesced manner, where threads in a warp access consecutive memory locations. This allows the GPU to fetch the data in a single transaction, improving performance.

11.5. Use CUDA Libraries

Take advantage of the optimized CUDA libraries for common tasks like linear algebra, signal processing, and image processing. These libraries are highly optimized for NVIDIA GPUs and can provide significant performance gains compared to writing your own code.

11.6. Profile Your Code

Use the NVIDIA profiling tools to identify performance bottlenecks in your code. The profiler can help you identify areas where you can optimize your code to improve performance.

11.7. Write Readable and Maintainable Code

Write code that is easy to read and understand. Use meaningful variable names, add comments to explain your code, and follow a consistent coding style. This will make it easier to maintain your code and to collaborate with other developers.

By following these best practices, you can write efficient and maintainable CUDA code that takes full advantage of the GPU’s parallel architecture.

12. Common Mistakes to Avoid in CUDA Programming

Even experienced programmers can make mistakes when working with CUDA. Being aware of these common pitfalls can save you time and effort.

12.1. Ignoring Memory Coalescing

Non-coalesced memory access patterns can drastically reduce performance. Ensure threads within a warp access contiguous memory locations to maximize memory throughput.

12.2. Excessive CPU-GPU Data Transfers

Frequent data transfers between the CPU and GPU can negate the performance benefits of GPU acceleration. Minimize data transfers by performing as much computation as possible on the GPU.

12.3. Incorrect Grid and Block Dimensions

Choosing inappropriate grid and block dimensions can lead to underutilization of GPU resources. Experiment with different configurations to find the optimal settings for your application.

12.4. Race Conditions in Shared Memory

When using shared memory, be careful to avoid race conditions. Use proper synchronization mechanisms, such as __syncthreads(), to ensure data consistency.

12.5. Launching Too Many Threads

Launching an excessive number of threads can lead to resource exhaustion and performance degradation. Limit the number of threads to the available resources on the GPU.

12.6. Ignoring Error Handling

CUDA function calls can fail for various reasons. Always check for errors and handle them appropriately to prevent unexpected behavior.

12.7. Over-reliance on Global Memory

Global memory access is slow compared to shared memory. Whenever possible, use shared memory to cache data and reduce the number of accesses to global memory.

12.8. Neglecting to Profile Code

Profiling is essential for identifying performance bottlenecks in CUDA code. Use the NVIDIA profiling tools to pinpoint areas that need optimization.

By avoiding these common mistakes, you can write more efficient and robust CUDA code.

13. Resources for Learning CUDA

Learning CUDA can seem daunting, but there are many excellent resources available to help you on your journey.

13.1. NVIDIA Developer Website

The NVIDIA Developer Website is the primary resource for all things CUDA. It offers comprehensive documentation, tutorials, code samples, and more.

13.2. CUDA Toolkit Documentation

The CUDA Toolkit comes with extensive documentation that covers all aspects of the CUDA API. This documentation is an invaluable resource for understanding the intricacies of CUDA programming.

13.3. Online Courses

Several online platforms offer courses on CUDA programming. Coursera, Udacity, and edX have courses that range from introductory to advanced levels.

13.4. Books

There are many excellent books on CUDA programming. Some popular titles include “CUDA by Example” by Sanders and Kandrot, and “Programming Massively Parallel Processors” by Kirk and Hwu.

13.5. Community Forums

The NVIDIA Developer Forums are a great place to ask questions, share your knowledge, and connect with other CUDA developers.

13.6. Open Source Projects

Studying the source code of open-source projects that use CUDA can provide valuable insights into real-world CUDA programming techniques.

13.7. NVIDIA Developer Blog

The NVIDIA Developer Blog features articles on the latest advances in CUDA technology, as well as tips and tricks for CUDA programming.

By utilizing these resources, you can gain a solid understanding of CUDA and become a proficient CUDA programmer.

14. Staying Up-to-Date with CUDA Developments

The world of parallel computing is constantly evolving, so it’s important to stay up-to-date with the latest developments in CUDA.

14.1. NVIDIA Developer Newsletters

Subscribe to the NVIDIA Developer Newsletters to receive updates on new CUDA features, tools, and events.

14.2. NVIDIA Developer Blog

Regularly read the NVIDIA Developer Blog to learn about the latest CUDA techniques, best practices, and case studies.

14.3. CUDA Release Notes

Review the CUDA Release Notes for each new version of the CUDA Toolkit to understand the changes and improvements.

14.4. Conferences and Events

Attend conferences and events such as the GPU Technology Conference (GTC) to learn about the latest advances in CUDA and network with other developers.

14.5. Research Papers

Read research papers on parallel computing and GPU acceleration to stay informed about the latest theoretical and practical developments.

14.6. Social Media

Follow NVIDIA and other experts in the field on social media platforms like Twitter and LinkedIn to stay up-to-date on the latest news and trends.

By staying informed about the latest developments in CUDA, you can ensure that your skills and knowledge remain relevant and competitive.

15. Need Answers? Ask WHAT.EDU.VN

Still have questions about CUDA or anything else? At WHAT.EDU.VN, we’re dedicated to providing you with the answers you need, quickly and without cost. Don’t struggle with unanswered questions. Visit WHAT.EDU.VN today and ask your question for free. Our community of experts is ready to help.

Address: 888 Question City Plaza, Seattle, WA 98101, United States

Whatsapp: +1 (206) 555-7890

Website: what.edu.vn