Are you looking to understand Retrieval-Augmented Generation (RAG) and how it enhances AI models? At WHAT.EDU.VN, we provide clear answers and insights into complex topics like RAG, so you can grasp its fundamentals and applications. RAG can significantly improve the accuracy and reliability of generative AI, which makes it a valuable tool across many industries.

1. What Exactly Is Retrieval-Augmented Generation (RAG)?

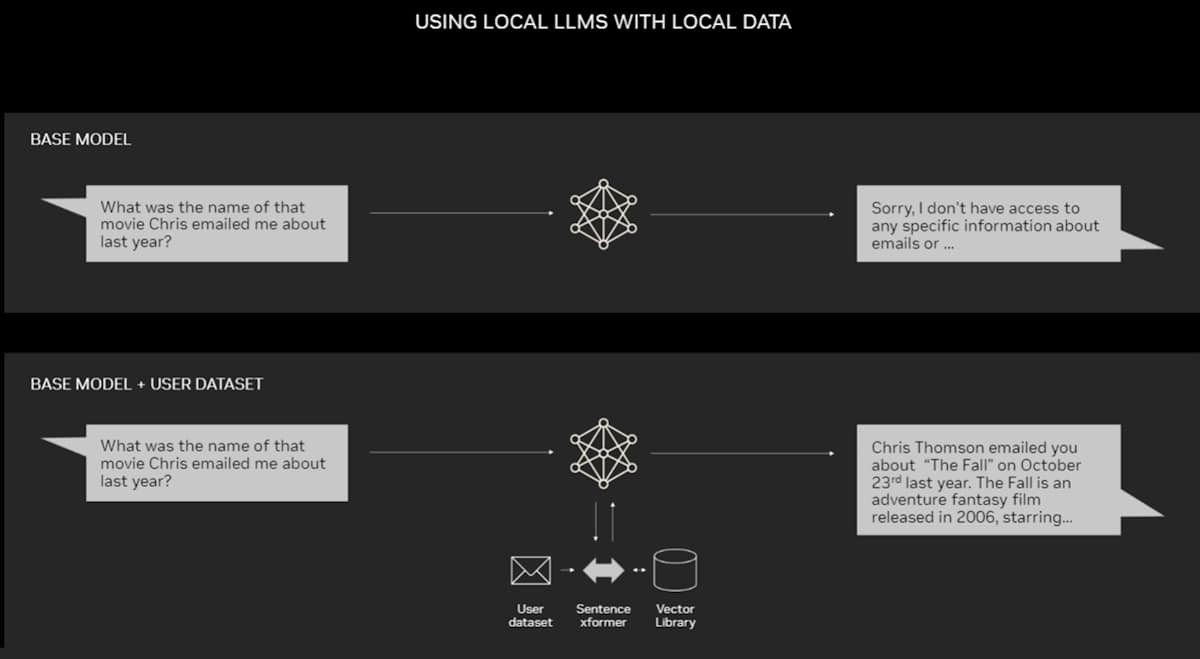

Retrieval-Augmented Generation (RAG) is a technique used to improve the accuracy and reliability of generative AI models by incorporating information from external, relevant data sources. This method addresses a key limitation of Large Language Models (LLMs), which, while proficient in general language patterns, often lack the specific knowledge required for in-depth or specialized queries.

In essence, RAG bridges the gap between an LLM’s broad understanding and the need for detailed, up-to-date information. By fetching data from external knowledge bases, RAG ensures that the AI model’s responses are not only contextually relevant but also grounded in factual, verifiable sources.

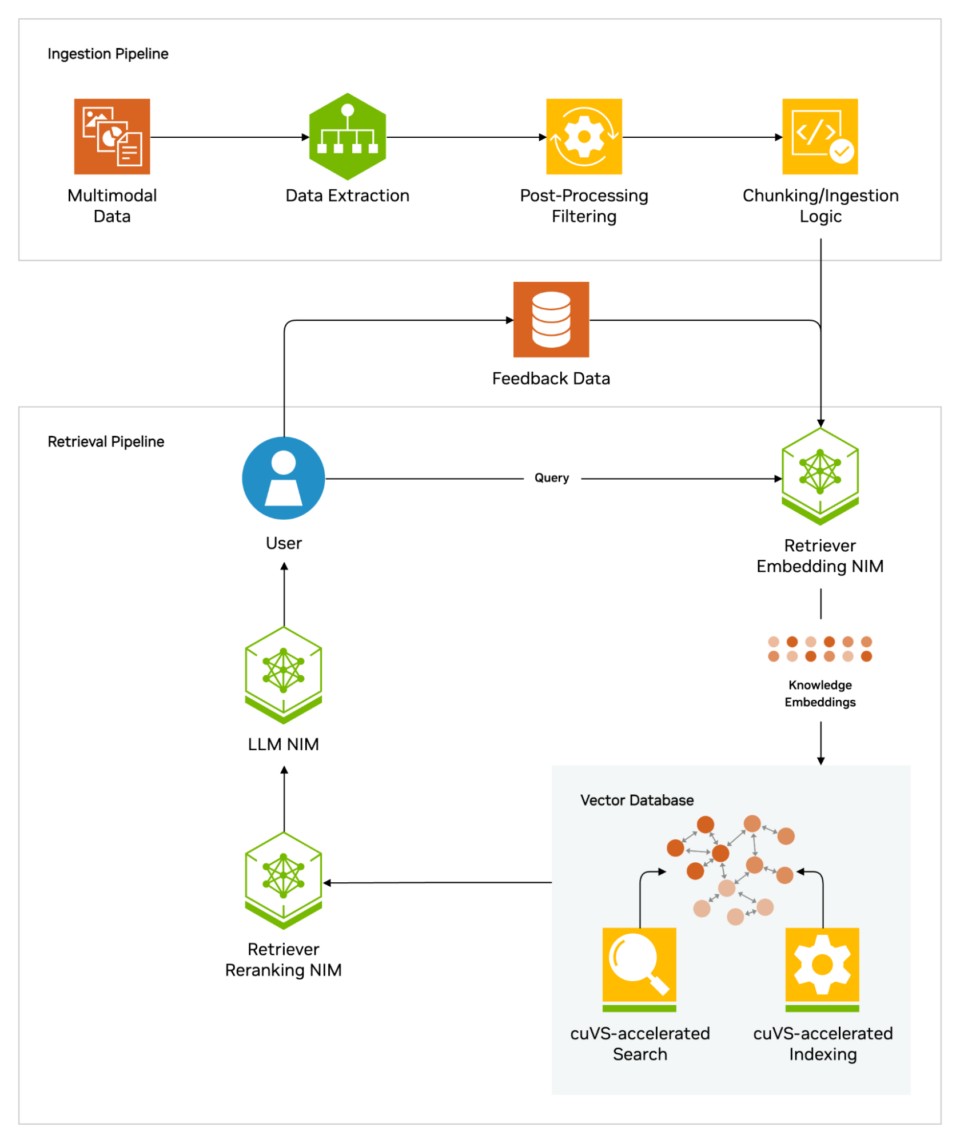

1.1. How RAG Works: A Simplified Explanation

Imagine you’re asking a knowledgeable friend a question. If they don’t know the answer offhand, they might consult a book or search the internet to find the information. RAG works similarly:

- User Query: A user poses a question to the AI model.

- Query Embedding: The question is converted into a numerical representation (an embedding, or vector) that can be compared mathematically with other text.

- Information Retrieval: The query vector is compared against the vectors in a vector database, which indexes the content of the knowledge base.

- Data Retrieval: The system returns the passages whose vectors are most similar to the query vector.

- Augmented Generation: The LLM combines the retrieved information with its own knowledge to generate a comprehensive and accurate answer for the user, often citing the sources used.
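The steps above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a production pipeline: `embed` is a bag-of-words stand-in for a real embedding model, `KNOWLEDGE_BASE` is a three-sentence toy corpus, and `fake_llm` is a hypothetical placeholder for a real model call.

```python
import math
import re
from collections import Counter

# Toy knowledge base; in practice these would be chunks of real documents.
KNOWLEDGE_BASE = [
    "RAG retrieves documents from an external knowledge base before generating.",
    "Fine-tuning continues training a model on a task-specific dataset.",
    "A vector database stores embeddings and supports similarity search.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector (assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Embed the query and return the k most similar passages."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the user query into one prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer using the context and cite sources by number."
    )


def fake_llm(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM call."""
    return f"(model output for a prompt of {len(prompt)} characters)"


query = "What does a vector database store?"
passages = retrieve(query)               # query embedding + retrieval
prompt = build_prompt(query, passages)   # augmentation
answer = fake_llm(prompt)                # generation
```

In a real system, `embed` would call an embedding model and the sorted scan in `retrieve` would be replaced by a vector database query, but the flow of data stays the same.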

1.2. Key Components of RAG

- Large Language Model (LLM): The core AI model responsible for generating responses.

- Embedding Model: Converts text into numerical vectors for efficient searching.

- Vector Database: Stores the embedding vectors of the knowledge base and indexes them for fast similarity search.

- Knowledge Base: The external source of information, which can include documents, databases, and other data repositories.

2. Why Is RAG Important for Generative AI?

RAG is crucial because it addresses several limitations of standalone LLMs:

2.1. Enhancing Accuracy and Reducing Hallucinations

LLMs are trained on vast amounts of data, but they can sometimes generate plausible but incorrect answers, a phenomenon known as “hallucination.” RAG mitigates this by grounding the model’s responses in verifiable facts from external sources.
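One common way to apply this grounding is in the prompt itself. The sketch below is an assumption about how such a prompt might be worded, not a standard template; the function name `grounded_prompt` and the exact instructions are illustrative.

```python
def grounded_prompt(question: str, passages: list[str]) -> str:
    """Build a prompt that asks the model to answer only from retrieved text."""
    if passages:
        context = "\n".join(f"[{n}] {text}" for n, text in enumerate(passages, start=1))
    else:
        # Make an empty retrieval explicit instead of silently sending no context.
        context = "(no relevant passages were retrieved)"
    return (
        "Answer the question using only the numbered context below.\n"
        "If the context does not contain the answer, reply \"I don't know.\"\n"
        "Cite the passage numbers you relied on.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )


example = grounded_prompt(
    "When was the policy updated?",
    ["The returns policy was last updated in March."],
)
```

Instructions like these reduce, but do not eliminate, the chance that the model answers from memory rather than from the retrieved passages.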

2.2. Providing Up-to-Date Information

An LLM's knowledge is fixed at the point its training data was collected, so it can quickly become outdated. RAG lets the model draw on current information at query time, which keeps responses accurate and relevant as the underlying sources change.

2.3. Increasing Transparency and Trust

By providing sources for its answers, RAG increases the transparency of AI models. Users can verify the information and assess the credibility of the responses, building trust in the system.
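A simple way to surface sources is to keep each retrieved passage paired with where it came from and append a deduplicated source list to the answer. The sketch below assumes hypothetical file names and a hypothetical `Passage` type; real systems typically carry richer metadata such as URLs, page numbers, or timestamps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    text: str
    source: str  # where the passage came from, e.g. a file name or URL


def with_citations(answer: str, passages: list[Passage]) -> str:
    """Append a numbered, deduplicated list of sources to an answer."""
    # dict.fromkeys keeps the first occurrence of each source, in order.
    sources = list(dict.fromkeys(p.source for p in passages))
    if not sources:
        return answer + "\n\nSources: none retrieved"
    lines = [f"[{n}] {src}" for n, src in enumerate(sources, start=1)]
    return answer + "\n\nSources:\n" + "\n".join(lines)


used = [
    Passage("Refunds are accepted within 30 days.", "returns-policy.md"),
    Passage("Refunds go to the original payment method.", "returns-policy.md"),
    Passage("Shipping is free over $50.", "shipping-faq.md"),
]
cited = with_citations("Refunds are accepted within 30 days.", used)
```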

2.4. Enabling Customization and Specialization

RAG allows businesses and organizations to tailor AI models to their specific needs by connecting them to proprietary knowledge bases. This enables specialized applications in areas such as customer support, employee training, and technical documentation.

3. What Are the Real-World Applications of RAG?

The versatility of RAG makes it applicable across various industries and use cases:

3.1. Healthcare

A generative AI model enhanced with a medical index can assist doctors and nurses by providing quick access to relevant medical information, research findings, and treatment options.

3.2. Finance

Financial analysts can benefit from RAG-powered assistants that provide real-time market data, economic indicators, and company-specific information.

3.3. Customer Support

Businesses can use RAG to create AI-powered chatbots that provide accurate and up-to-date answers to customer inquiries, improving customer satisfaction and reducing support costs.

3.4. Education

Students and educators can use RAG to access a wealth of information from textbooks, research papers, and other educational resources, facilitating learning and research.

3.5. Legal

Lawyers and legal professionals can leverage RAG to quickly find relevant case law, statutes, and legal documents, streamlining legal research and analysis.

4. What Are the Benefits of Using RAG?

RAG offers numerous advantages over traditional AI models and other methods of enhancing LLMs:

4.1. Improved Accuracy

By grounding responses in factual data, RAG significantly reduces the risk of inaccurate or misleading information.

4.2. Enhanced Reliability

RAG helps AI models give more consistent and dependable answers, even for complex or nuanced queries, because responses draw on the same retrieved sources rather than on the model's memory alone.

4.3. Increased Efficiency

RAG streamlines the process of accessing and integrating information, saving time and resources.

4.4. Greater Flexibility

RAG can be easily adapted to different knowledge bases and use cases, making it a versatile solution for a wide range of applications.

4.5. Reduced Costs

RAG is often more cost-effective than retraining an LLM with additional datasets, as it allows users to “hot-swap” new sources on the fly.
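To illustrate what "hot-swapping" sources means in practice, here is a minimal sketch. The `SourceRegistry` class, its method names, and the keyword-overlap scoring are all assumptions made for the example; the point is only that the knowledge changes while the model stays untouched.

```python
class SourceRegistry:
    """Holds named knowledge sources that can be attached or detached at runtime."""

    def __init__(self) -> None:
        self._sources: dict[str, list[str]] = {}

    def attach(self, name: str, passages: list[str]) -> None:
        self._sources[name] = list(passages)

    def detach(self, name: str) -> None:
        self._sources.pop(name, None)

    def search(self, query: str, k: int = 3) -> list[tuple[str, str]]:
        """Return up to k (source name, passage) pairs ranked by shared words."""
        terms = set(query.lower().split())
        scored = []
        for name, passages in self._sources.items():
            for text in passages:
                overlap = len(terms & set(text.lower().split()))
                if overlap:
                    scored.append((overlap, name, text))
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(name, text) for _, name, text in scored[:k]]


registry = SourceRegistry()
registry.attach("policy-2023", ["refund window is 30 days"])
before = registry.search("refund window")

# Swap in a newer source; no model weights are touched.
registry.detach("policy-2023")
registry.attach("policy-2024", ["refund window is 14 days"])
after = registry.search("refund window")
```

The same question now retrieves the newer policy, which is the kind of update that would otherwise require retraining.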

5. What Is the History of RAG?

The concept of RAG has roots in the early days of information retrieval and question-answering systems.

5.1. Early Question-Answering Systems (1970s)

Researchers began developing systems that used natural language processing (NLP) to access text in specific domains, such as baseball statistics.

5.2. Ask Jeeves (Mid-1990s)

The Ask Jeeves service, now Ask.com, popularized question answering with its natural language interface.

5.3. IBM Watson (2011)

IBM’s Watson gained fame by defeating human champions on the Jeopardy! game show, demonstrating the power of AI in question answering.

5.4. The Seminal 2020 Paper

Patrick Lewis and his colleagues at Facebook AI Research (now Meta AI), University College London, and New York University published a paper that coined the term “retrieval-augmented generation” and outlined the technique’s potential for enhancing generative AI models.

6. What Are the Key Considerations When Implementing RAG?

Implementing RAG effectively requires careful consideration of several factors:

6.1. Data Source Selection

Choosing the right knowledge base is crucial for ensuring the accuracy and relevance of the AI model’s responses.

6.2. Indexing Strategy

Developing an efficient indexing strategy is essential for quickly retrieving the most relevant information from the knowledge base.
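A typical first step in indexing is splitting documents into overlapping chunks so that each piece is small enough to embed and retrieve on its own. The sketch below splits on words; the function name `chunk_words` and the default sizes are illustrative assumptions, and real pipelines often split on tokens, sentences, or document structure instead.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, size=4, overlap=1)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.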

6.3. Embedding Model Selection

Selecting an appropriate embedding model is important for accurately converting text into numerical vectors.
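One practical way to choose between candidates is to score each on a small set of queries with known correct passages. The sketch below compares two stand-in "embedders" (word counts and character trigrams), which are assumptions used only to keep the example self-contained; with real models, `Embedder` would wrap a model call and the vectors would be dense.

```python
import math
from collections import Counter
from typing import Callable

Embedder = Callable[[str], Counter]


def word_embedder(text: str) -> Counter:
    """Stand-in embedder: whole-word counts."""
    return Counter(text.lower().split())


def trigram_embedder(text: str) -> Counter:
    """Stand-in embedder: character trigram counts, tolerant of word endings."""
    t = text.lower()
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top1_accuracy(embed: Embedder, docs: list[str], labeled: list[tuple[str, int]]) -> float:
    """Fraction of queries whose best-scoring document is the expected one."""
    doc_vecs = [embed(d) for d in docs]
    hits = 0
    for query, expected in labeled:
        q = embed(query)
        scores = [cosine(q, v) for v in doc_vecs]
        best = max(range(len(docs)), key=scores.__getitem__)
        # Require a non-zero score so an all-zero tie does not count as a hit.
        if scores[best] > 0 and best == expected:
            hits += 1
    return hits / len(labeled)


docs = [
    "refund policy for returned items",
    "shipping times and carriers",
    "password reset instructions",
]
labeled = [("refunds", 0), ("shipment carrier", 1), ("resetting passwords", 2)]

word_acc = top1_accuracy(word_embedder, docs, labeled)
trigram_acc = top1_accuracy(trigram_embedder, docs, labeled)
```

On this tiny set the trigram stand-in scores higher because the queries use different word forms than the documents. The broader point is that the comparison should be run on queries that resemble real user questions.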

6.4. Integration with LLM

Seamlessly integrating RAG with the LLM is necessary for generating coherent and informative responses.

6.5. Monitoring and Evaluation

Regularly monitoring and evaluating the performance of the RAG system is crucial for identifying areas for improvement.
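Retrieval quality is usually the first thing to measure, since the LLM cannot cite what was never retrieved. Below is a minimal sketch of two widely used retrieval metrics, recall@k and reciprocal rank; the document IDs and the evaluation set are made up for illustration.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


# Each entry: (ranked IDs returned by the retriever, IDs a human marked as relevant).
eval_set = [
    (["d3", "d1", "d7"], {"d1", "d2"}),
    (["d5", "d9", "d4"], {"d5"}),
]

mean_recall_at_2 = sum(recall_at_k(r, rel, 2) for r, rel in eval_set) / len(eval_set)
mean_reciprocal_rank = sum(reciprocal_rank(r, rel) for r, rel in eval_set) / len(eval_set)
```

Tracking these numbers over time on a fixed evaluation set makes it easier to tell whether a change to chunking, the embedding model, or the index helped or hurt.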

7. How Does RAG Compare to Fine-Tuning?

Both RAG and fine-tuning are methods for improving the performance of LLMs, but they differ in their approach and use cases:

7.1. RAG

- Approach: Augments the LLM’s knowledge by retrieving information from external sources at the time of query.

- Use Cases: Suitable for tasks that require up-to-date information, access to specialized knowledge, or transparency in the sources used.

- Advantages: More flexible, cost-effective, and easier to implement than fine-tuning.

- Disadvantages: Adds latency at query time, since each request includes a retrieval step, and answer quality depends on how well that retrieval works.

7.2. Fine-Tuning

- Approach: Continues training the LLM on a specific dataset, updating its weights to adapt it to a particular task or domain.

- Use Cases: Suitable for tasks that require the LLM to learn new skills or adapt to a specific style of writing.

- Advantages: Can result in faster response times and better performance on specific tasks.

- Disadvantages: More expensive and time-consuming than RAG, and requires a large, high-quality dataset.

8. What Are the Tools and Technologies Used in RAG?

Several tools and technologies are commonly used in RAG implementations:

8.1. LangChain

An open-source library that provides a framework for chaining together LLMs, embedding models, and knowledge bases.

8.2. Vector Databases

Specialized databases designed for storing and querying vector embeddings, such as Pinecone and Weaviate.
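To make the core operation concrete, here is a brute-force, in-memory sketch of what a vector database does: store vectors, then return the nearest ones to a query by cosine similarity. The class `InMemoryVectorStore` is hypothetical and is not the API of Pinecone, Weaviate, or any other product; production systems generally use approximate nearest-neighbor indexes rather than scanning every vector.

```python
import heapq
import math


def _normalize(vector: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("zero vector cannot be normalized")
    return [x / norm for x in vector]


class InMemoryVectorStore:
    """Brute-force store: every search scans all stored vectors."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self._items: list[tuple[str, list[float]]] = []

    def add(self, item_id: str, vector: list[float]) -> None:
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        # Store unit-length vectors so a dot product equals cosine similarity.
        self._items.append((item_id, _normalize(vector)))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k most similar items as (id, cosine similarity) pairs."""
        if len(query) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(query)}")
        q = _normalize(query)
        scored = (
            (sum(a * b for a, b in zip(q, vec)), item_id)
            for item_id, vec in self._items
        )
        return [(item_id, score) for score, item_id in heapq.nlargest(k, scored)]


store = InMemoryVectorStore(dim=2)
store.add("a", [1.0, 0.0])
store.add("b", [0.0, 1.0])
store.add("c", [1.0, 1.0])
results = store.search([1.0, 0.1], k=2)
```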

8.3. Embedding Models

Models that convert text into numerical vectors, such as OpenAI’s text embedding models and Sentence Transformers.

8.4. NVIDIA NeMo Retriever

NVIDIA's retrieval tooling, aimed at providing accurate information retrieval at large scale.

8.5. NVIDIA NIM

Microservices for simplifying secure, high-performance AI deployment.

9. What Are the Future Trends in RAG?

The field of RAG is rapidly evolving, with several exciting trends on the horizon:

9.1. Agentic AI

The integration of RAG with agentic AI, where LLMs and knowledge bases are dynamically orchestrated to create autonomous assistants.

9.2. Improved Embedding Models

The development of more accurate and efficient embedding models that can capture the nuances of language.

9.3. Enhanced Vector Databases

The emergence of vector databases with improved scalability, performance, and features.

9.4. RAG on the Edge

The deployment of RAG on edge devices, such as laptops and smartphones, enabling real-time access to information without relying on cloud connectivity.

9.5. Multi-Modal RAG

Extending RAG to incorporate multiple modalities, such as images, audio, and video, to provide more comprehensive and context-aware responses.

10. How Can I Get Started with RAG?

If you’re interested in exploring RAG, here are a few steps you can take to get started:

10.1. Experiment with Open-Source Tools

Explore open-source libraries like LangChain and experiment with different LLMs, embedding models, and vector databases.

10.2. Utilize Cloud-Based Services

Take advantage of cloud-based RAG services offered by providers like AWS, Google Cloud, and Microsoft Azure.

10.3. Explore NVIDIA Resources

Check out the NVIDIA AI Blueprint for RAG and the NVIDIA LaunchPad lab for building AI chatbots with RAG.

10.4. Join the Community

Engage with the RAG community through online forums, conferences, and workshops.

10.5. Seek Expert Advice

Consult with AI experts and consultants who can provide guidance and support for your RAG projects.

FAQ: Understanding Retrieval-Augmented Generation (RAG)

| Question | Answer |
|---|---|
| What is the main purpose of RAG? | To enhance the accuracy and reliability of generative AI models by providing them with access to external knowledge sources. |
| How does RAG differ from traditional LLMs? | Traditional LLMs rely solely on their internal knowledge, while RAG systems can access and incorporate information from external sources in real time. |
| What types of data sources can RAG use? | RAG can use a variety of data sources, including documents, databases, websites, and APIs. |
| What are the key benefits of using RAG? | Improved accuracy, enhanced reliability, increased efficiency, greater flexibility, and reduced costs. |
| In what industries is RAG commonly used? | Healthcare, finance, customer support, education, and legal. |
| What are some popular tools for implementing RAG? | LangChain, Pinecone, Weaviate, OpenAI’s text embedding models, and Sentence Transformers. |
| What are the future trends in RAG? | Agentic AI, improved embedding models, enhanced vector databases, RAG on the edge, and multi-modal RAG. |
| How does RAG help reduce hallucinations in AI? | By grounding the model’s responses in verifiable facts from external sources, RAG reduces the risk of inaccurate or misleading information. |
| Is RAG difficult to implement? | Implementing RAG effectively requires careful planning and execution, but several open-source tools and cloud-based services make it more accessible to developers and organizations. |
| Can RAG be used with any LLM? | RAG can be used with nearly any LLM, making it a versatile solution for enhancing generative AI models. The 2020 paper by Lewis and colleagues describes it as a general-purpose fine-tuning recipe. |

Retrieval-Augmented Generation (RAG) represents a significant advancement in the field of generative AI, offering a powerful approach to enhancing the accuracy, reliability, and versatility of AI models. By connecting LLMs to external knowledge bases, RAG enables a wide range of applications across various industries, from healthcare and finance to customer support and education.
